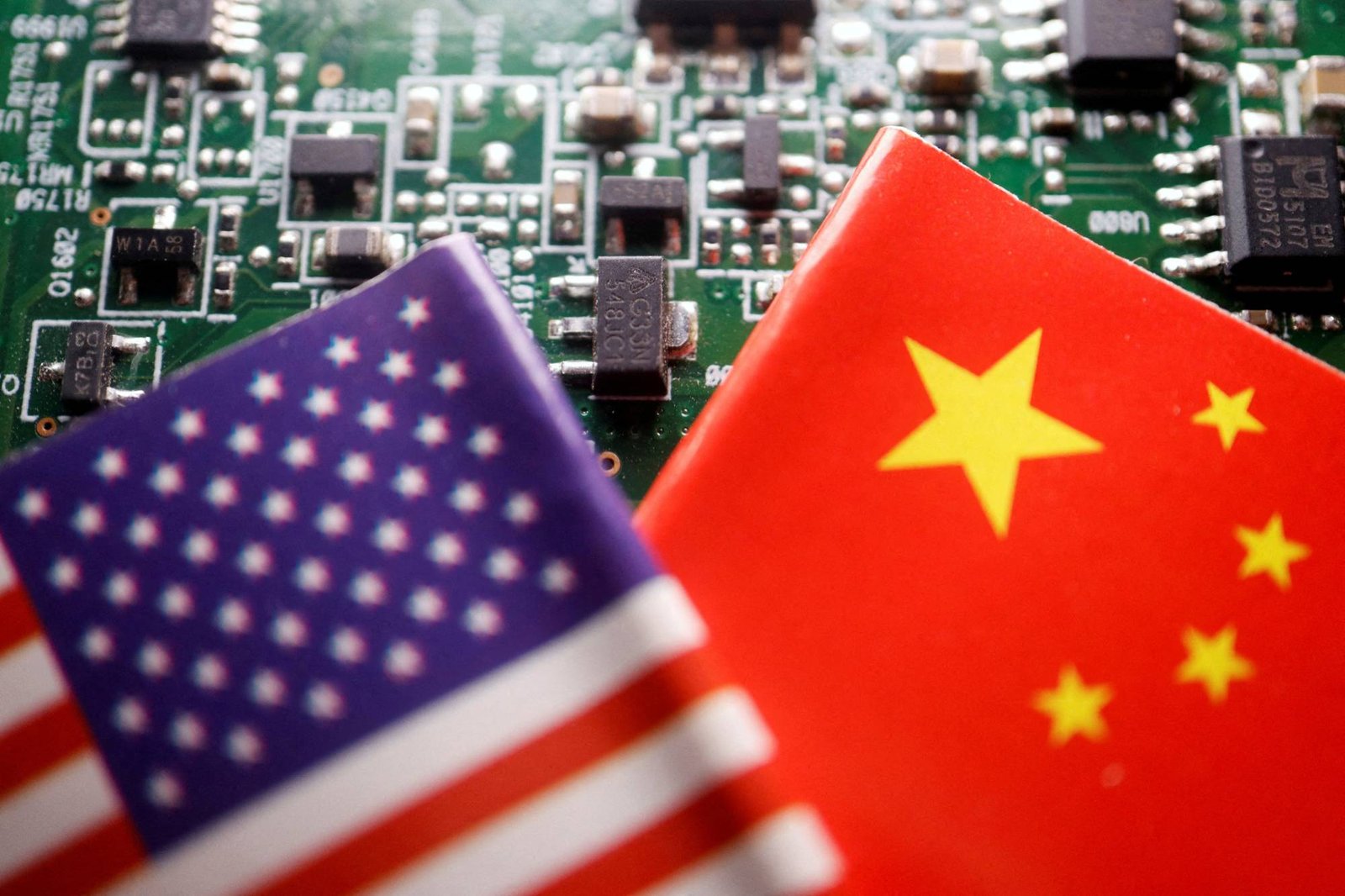

Last year, the U.S. imposed export controls on microchips in an attempt to restrict China’s development of supercomputers, which are used in areas such as nuclear weapons research, and of artificial intelligence systems such as ChatGPT. The restrictions limited shipments of chips from Nvidia and Advanced Micro Devices, which are commonly used to develop chatbots and other AI systems. So far, however, the controls have had only minimal effects on China’s tech sector.

Despite this, Nvidia has developed variations of its chips specifically for the Chinese market, which are slowed down to comply with U.S. regulations. The newest version, the Nvidia H800, announced in March, is expected to take up to 30% longer to perform some AI tasks and could double some costs when compared with Nvidia’s fastest U.S. chips.

Nevertheless, the slower Nvidia chips still represent an improvement for Chinese firms. Tencent Holdings, one of China’s largest tech companies, has estimated that using Nvidia’s H800 will reduce the time it takes to train its largest AI system from 11 days to just four days.

Experts have observed that the “handicap” resulting from the slowed-down chips is relatively small and manageable. Charlie Chai, a Shanghai-based analyst with 86Research, said that AI companies he has spoken to see the restriction as a minor setback.

This situation highlights the challenge faced by the U.S. in slowing China’s progress in high tech without harming U.S. companies.

The U.S. also wanted to avoid pushing China to abandon U.S. chips altogether and step up its own chip-development efforts. That meant drawing a line somewhere, and the challenge was to avoid being too disruptive immediately while still reducing China’s capability over time. According to an anonymous chip industry executive, the export restrictions were a necessary step toward that goal.

The export restrictions have two parts. The first caps a chip’s ability to perform highly precise calculations, a measure intended to limit the development of supercomputers for military research. Chip industry sources considered this measure effective. However, precise calculations matter less in AI work such as large language models, where a chip’s ability to process vast amounts of data is more important.

Despite the restrictions, Nvidia is still able to sell the H800 to China’s biggest technology companies, including Alibaba Group Holding, Tencent, and Baidu, for use in AI applications. However, the company has not yet begun shipping the chips in large volumes. Nvidia said in a recent statement that the government is not seeking to harm competition or U.S. industry and still allows U.S. companies to supply products for commercial activities, such as providing cloud services to consumers.

Nvidia, a U.S. technology company, considers China an important market for its products. Selling its goods in China not only benefits Nvidia but also creates job opportunities for its U.S.-based partners. However, the export controls require the company to build products with a growing gap between what it offers in the Chinese and U.S. markets. Despite this, the company is committed to complying with the regulations while offering competitive products in each market.

According to Bill Dally, Nvidia’s chief scientist, this gap will continue to widen rapidly as training requirements double every six to 12 months. The Bureau of Industry and Security, the arm of the U.S. Commerce Department that oversees the regulations, did not respond to a request for comment.

Slowed but not stopped

The other U.S. limit, on chip-to-chip transfer speeds, does affect AI, because the models behind technologies such as ChatGPT are too large to fit onto a single chip. They must instead be spread across many chips, often thousands, all of which have to communicate with one another.

Nvidia has not publicly disclosed the performance details of the China-only H800. However, a specification sheet obtained by Reuters shows a chip-to-chip speed of 400 gigabytes per second, less than half the 900 gigabytes per second peak speed of Nvidia’s flagship H100 chip, which is available outside China.

Despite the lower speed of the H800 chip, some experts in the AI industry believe it is still sufficient. Naveen Rao, CEO of MosaicML, a startup that specializes in optimizing AI models for limited hardware, estimates that there will only be a 10%-30% slowdown in the system.

Rao added that there are ways to work around this limitation algorithmically, and that he does not see it as a significant barrier for at least the next decade.
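As a rough illustration of how a link running at less than half speed can translate into a much smaller overall slowdown, the sketch below uses the two chip-to-chip speeds cited above and an assumed share of training time spent on chip-to-chip transfers. The function name, the communication shares, and the simplification that transfers do not overlap with computation are all hypothetical choices made for this example; they do not come from Nvidia, Reuters, or MosaicML.

```python
# Illustrative model only: the communication shares below are assumptions,
# not measured figures from Nvidia, Reuters, or MosaicML.

H100_LINK_GBPS = 900.0  # chip-to-chip speed cited for the H100 (GB/s)
H800_LINK_GBPS = 400.0  # chip-to-chip speed cited for the H800 (GB/s)


def relative_step_time(comm_fraction: float) -> float:
    """Return H800 step time relative to an H100 step time of 1.0.

    comm_fraction is the assumed share of an H100 training step spent on
    chip-to-chip transfers. Only that share is stretched by the slower
    link; compute time is assumed to be unchanged.
    """
    if not 0.0 <= comm_fraction <= 1.0:
        raise ValueError("comm_fraction must be between 0 and 1")
    compute_time = 1.0 - comm_fraction
    comm_time = comm_fraction * (H100_LINK_GBPS / H800_LINK_GBPS)
    return compute_time + comm_time


# Print the estimated slowdown for a few assumed communication shares.
for fraction in (0.08, 0.16, 0.24):
    slowdown = relative_step_time(fraction) - 1.0
    print(f"communication share {fraction:.0%}: about {slowdown:.0%} slower")
```

Under these assumptions, a slowdown in the 10%-30% range would correspond to transfers taking up roughly 8% to 24% of each training step on the faster chip. Real systems overlap communication with computation and differ in other ways, so the numbers are indicative at best.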

A chip sold in China may take twice as long as a faster U.S. chip to complete an AI training task, but money can still get the job done. According to an anonymous industry source, the cost of training such a model could rise from $10 million to $20 million. That is a setback, but not an insurmountable one for companies like Alibaba or Baidu.

Additionally, AI researchers are trying to shrink their massive systems to cut the cost of training models similar to ChatGPT. Smaller models would require fewer chips, which would reduce chip-to-chip communication and lessen the impact of U.S. speed restrictions.

Two years ago, the industry believed that AI models would grow larger and larger, according to Cade Daniel, a software engineer at San Francisco-based startup Anyscale. With efforts now under way to reduce model size, the impact of the export restrictions is noticeable but not as devastating as it could have been.